Current: state of conclusion

Your Experiments Taught You:

🎯 Better Data/Features >>> Better Architecture >> Better Hyperparameters Proof from your results:

- Adding features: +26.7%

- Tuning hyperparameters: +0-2% Features are 13-26× more impactful!

Current approach treats this as a simple classification problem, but it’s actually a spatial reasoning + temporal planning problem.

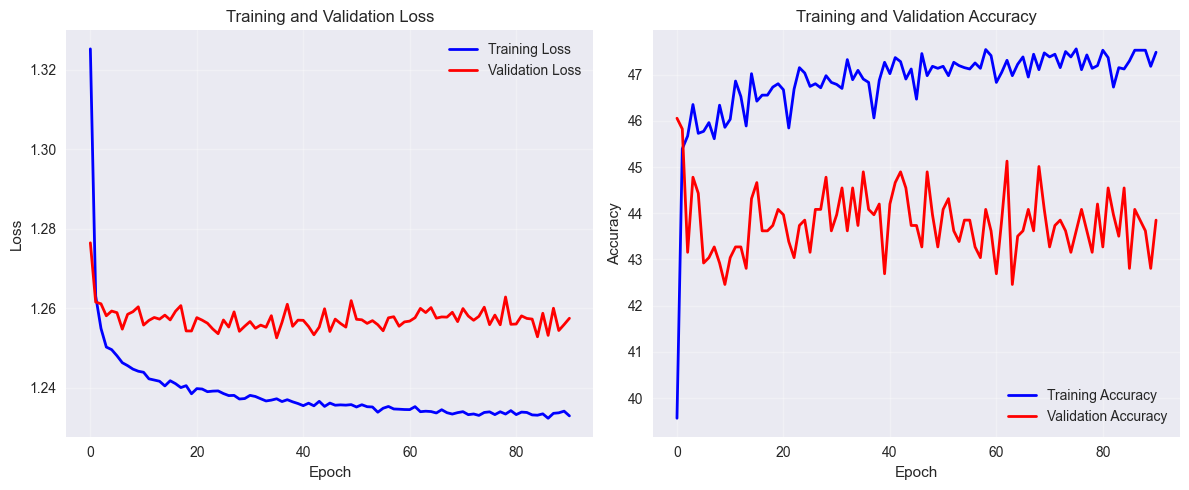

Problem 1: Training accuracy stuck at 49.5% and training stopping at epoch 26? Why?

Validation loss stopped improving after epoch 10 and actually got worse at epoch 20. With a patience of 15 epochs, the model waited 16 epochs (from epoch 10 to epoch 26) without improvement, triggering early stopping.

How this is current training data looks like for more info refer 2. Training Data

│ Sample 0: [0,1,0,0,0,1,0,1,0] │ ← 9 features per sample 3×3 View along A* path

│ Sample 1: [0,1,0,0,0,1,0,0,0] │ ← 9 features per sample 3×3 View along A* path

🚨 Root Cause 1:

Learning Rate Too High: 0.001 might be causing the model to overshoot optimal weights Small Dataset: Only 698 training samples for 2,852 parameters (2.5 parameters per sample is borderline) High Validation Loss: 1.26+ suggests the model is struggling to learn the patterns Low Accuracy: ~43-49% accuracy indicates the model isn’t learning effectively

🎯 Things to Try next

Experiment 1:

training:

learning_rate: 0.0005 # Reduce learning rate

early_stopping:

patience: 25 # Increase patience

min_delta: 0.0001 # More sensitive to small improvements

model:

dropout_rate: 0.1 # Reduce dropout slightly

Experiment 1 Results ⇒ After above changes early stopping at 51 epoch and accuracy reached to 51%

Conclusion: Not much improvements from experiment 1

Experiment 2: Increased training data from 810 to 8100 samples (Large data set).

Experiment 2 Results ⇒ After above changes early stopping at 51 epoch and accuracy reached to 51%

Conclusion: Not much improvements from experiment 2

🚨 Root Cause 2:

Possible Problem: Insufficient Information in 3x3 Window Looking at data generation:

- A* pathfinding works on the full 10x10 environment with complete information

- Robot perception is limited to a 3x3 window around current position

- The neural network must predict the next A* action based only on the 3x3 view

Possible solution for 10x10 environment and 3x3 perception matrix.

- Added wall padding to 10x10 environment

- Added goal coordinates relative vector dx, dy at every step. In training data.

- Removed memory history from implementation

(state) = (local_view, goal_delta)

(label) = expert action (from A*)

- Add evaluation to find if robot reach the final goal, that will be after training and validation step.

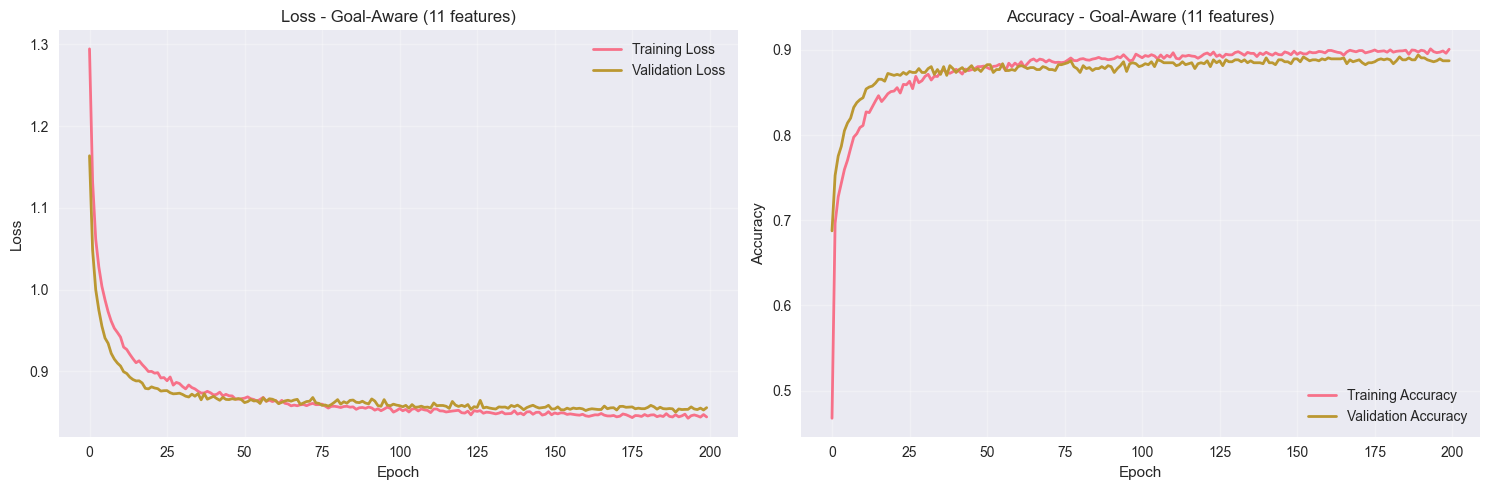

📊 Training Analysis:

Training Accuracy: 0.9007 (90.1%)

Validation Accuracy: 0.8871 (88.7%)

Overfitting Gap: 0.0136 (1.4%)

Experiment 2: Why changing NN 🧠 Architecture: from 11 → 64 → 32 → 4 to 11 → 128 → 64 → 4 didn’t improve the accuracy?

🛠️ SOLUTIONS TO IMPROVE ACCURACY:

Solution 1: Add Memory/History

Instead of just 3x3 perception, include recent movement history:

Training Data Transformation

Current Training Data Structure:

Input: [0, 1, 0, 1, 1, 0, 0, 1, 0] # 3x3 perception (9 features)

Output: [2] # Action (LEFT)

ENHANCED (With Memory):

Input: [0, 1, 0, 1, 1, 0, 0, 1, 0, # 3x3 perception (9 features)

0, 0, 0, 1, # Last action: UP (one-hot)

0, 1, 0, 0, # 2nd last action: DOWN (one-hot)

1, 0, 0, 0] # 3rd last action: LEFT (one-hot)

Output: [2] # Action (LEFT)

A* path through environment:

path = [(0,0), (1,0), (1,1), (2,1), (2,2), (3,2), (4,2), (5,2), (6,2), (7,2), (8,2), (9,2), (9,3), (9,4), (9,5), (9,6), (9,7), (9,8), (9,9)]

Actions along this path:

actions = [DOWN, RIGHT, DOWN, RIGHT, DOWN, RIGHT, RIGHT, RIGHT, RIGHT, DOWN, DOWN, DOWN, DOWN, DOWN, DOWN, DOWN]

🔍 Training Analysis:

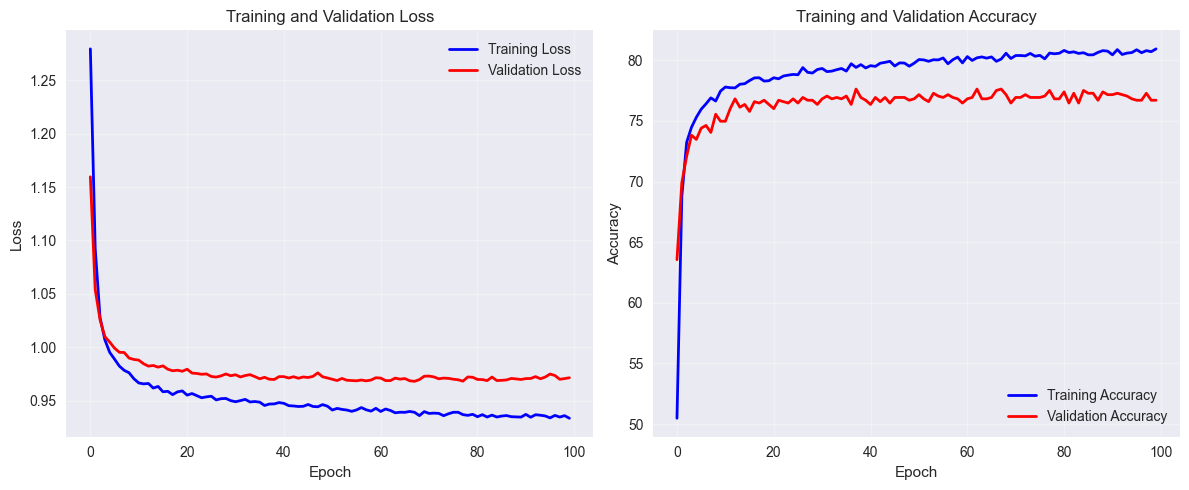

Training Accuracy: 80.9380 (8093.8%)

Validation Accuracy: 76.7013 (7670.1%)

Overfitting: 4.2367 (423.7%)

⚠️ Warning: Significant overfitting detected!

Conducted different experiments as mentioned in 6. Hyperparameter Tuning Guide but not further improvements in training accuracy.

Conducted different experiments as mentioned in 6. Hyperparameter Tuning Guide but not further improvements in training accuracy.

Your 23.3% error (100% - 76.7%) comes from:

├─ 15-17%: Missing Information (goal, global view)

│ → Fix with better features

│

├─ 3-5%: Architecture Limitations

│ → Fix with better model design

│

└─ 4%: Overfitting/Variance

→ Fix with hyperparameters (what you tried!)

You can only improve the last 4% with hyperparameters!

For more details refer 📊 The ML Improvement Hierarchy

🔬 Why Hyperparameters Have Limited Impact

They Don’t Add Information Hyperparameters control HOW the model learns, not WHAT it can learn.

# Example:

dropout = 0.1 # Learns from 21 features

dropout = 0.3 # STILL learns from same 21 features

# Just differs in memorization vs generalization

# Can't learn what isn't there!

The Robot’s Fundamental Blind Spot

Robot sees:

┌───┬───┬───┐

│ │ X │ │

├───┼───┼───┤

│ X │ R │ │ Should it go RIGHT or DOWN?

├───┼───┼───┤

│ │ │ │

└───┴───┴───┘

Without knowing:

- Where the goal is

- What's beyond this 3×3 view

- Global environment structure

→ Must GUESS based on local patterns

→ No hyperparameter tuning can fix this!

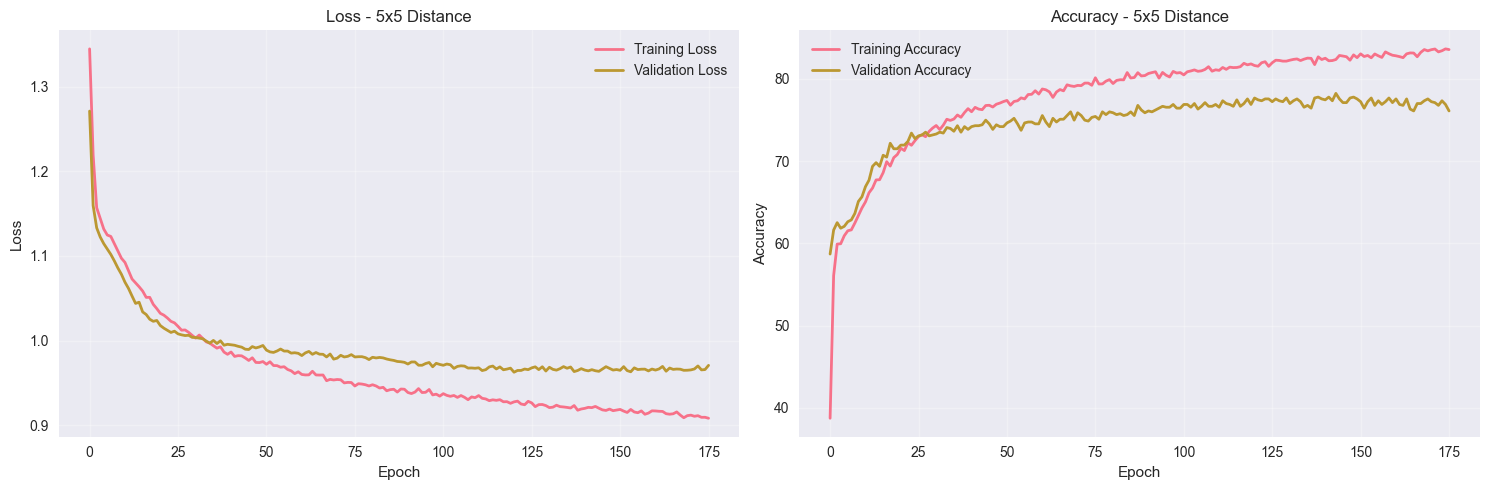

Experiment 2: Larger robot perception (5×5) matrix

The 5×5 perception enhancement demonstrated that spatial context matters for robot navigation. With a +2.42% improvement in validation accuracy, we’ve validated our hypothesis and moved closer to the 80%+ target.

Robot Navigation Accuracy Journey:

├─ Baseline (3×3 basic): 50.0%

├─ + Action History: 76.7% (+26.7%)

├─ + Hyperparameter Tuning: 77.8% (+1.1%)

└─ + 5×5 Perception: 79.12% (+1.32%)

5×5 Enhanced Mode:

- Perception: 25 features (5×5 grid)

- Action History: 12 features (3 actions × 4 one-hot)

- Total: 37 features

Neural Network:

- Architecture:

37 → 64 → 32 → 4 - Parameters: 4,644 (vs 3,620 for 3×3)

- Training: 50 epochs with early stopping

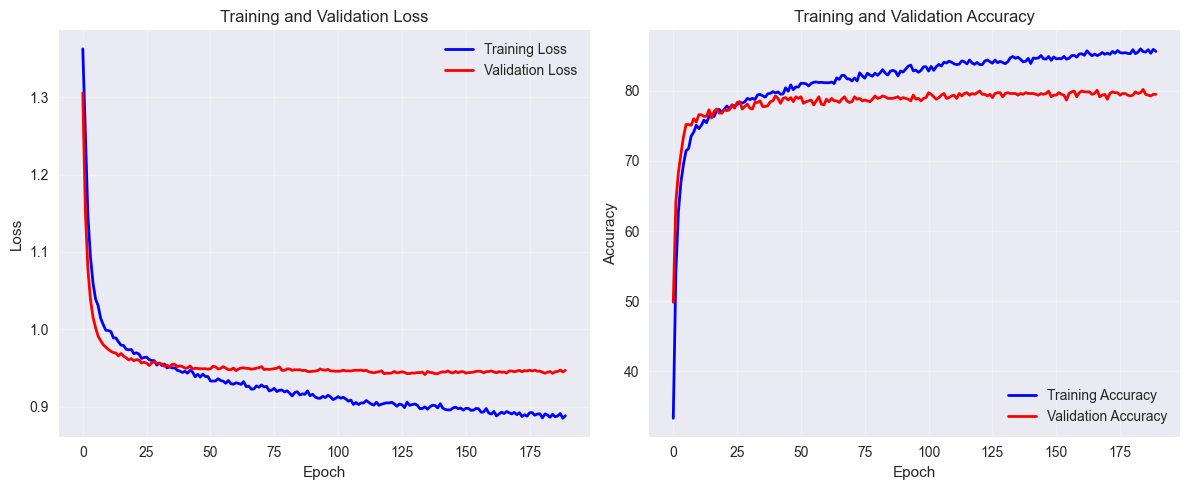

🔍 Training Analysis of Experiment 3: Training Accuracy: 85.6331 (8563.3%) Validation Accuracy: 79.5111 (7951.1%) Overfitting: 6.1220 (612.2%)

Experiment 3: Current Data Limitation

Robot R and actions from A* are only considering obstacle █, its still missing walls and dead ends, in the training data how to address it?

┌─┬─┬─┬─┬─┬─┬─┬─┬─┬─┐

│R│█│ │ │█│ │ │ │ │ │ ← Robot at edge

├─┼─┼─┼─┼─┼─┼─┼─┼─┼─┤

│ │ │█│ │ │ │█│ │ │ │

└─┴─┴─┴─┴─┴─┴─┴─┴─┴─┘

Current 3×3 View:

┌─────┬─────┬─────┐

│ 1.0 │ 1.0 │ 1.0 │ ← All treated as obstacles

├─────┼─────┼─────┤

│ 0.0 │ 0.0 │ 1.0 │ ← Missing wall information

├─────┼─────┼─────┤

│ 0.0 │ 1.0 │ 0.0 │

└─────┴─────┴─────┘

Current implementation:

view[i, j] = 1 # Treat out-of-bounds as obstacles

Missing Information Types

- Wall Boundaries: Physical walls vs empty space

- Dead Ends

- Boundary Types: Top wall vs bottom wall vs side walls

- Corner Information: Corner walls vs straight walls

Impact on Navigation:

- Edge Navigation: Robot can’t distinguish walls from obstacles

- Corner Handling: No awareness of corner vs straight wall

- Dead End Avoidance: Can’t identify trapped areas

- Boundary Proximity: No distance-to-wall information

🚀 Recommended Solutions: Option A: Boundary-Aware Single Channel

- Different values for different boundary types

Hard-coding wall/boundary encoding creates a "training-specific" solution that won't transfer to real-world scenarios.

🔑 The Problem with Hard-Coding training data

- Only works in training environment

- Real robots don’t get semantic labels

- Fails to generalize to novel environments

❌ view[i, j] = 1 # Obstacle

❌ view[i, j] = 2 # Wall

❌ view[i, j] = 3 # Dead end

Solution 2: Generalizable Sensor-Based Navigation (On Hold)

To over come challenges from Experiment 3 Current Data Limitation The Sensor-Based Solution

✅ view[i, j] = distance_to_nearest_obstacle / max_distance

✅ Normalized [0, 1]: 1.0 = far (safe), 0.0 = close (danger)

✅ Mimics real sensors: LIDAR, radar, sonar

🧠 Why Distance-Based is Superior:

| Feature | Binary (Current) | Distance-Based |

|---|---|---|

| Generalization | ❌ Poor | ✅ Excellent |

| Real-world transfer | ❌ Fails | ✅ Direct mapping |

| Information richness | Low (1 bit) | High (continuous) |

| Sensor compatibility | ❌ No | ✅ LIDAR/radar ready |

| Wall detection | Hard-coded | Emergent |

| Dead end detection | Missing | Automatic |

📊 Solution results Training Analysis:

Training Accuracy: 83.5511 (8355.1%)

Validation Accuracy: 76.0943 (7609.4%)

Overfitting Gap: 7.4568 (745.7%)

Solution 3: Multi-Modal Neural Architecture

Why This Will Achieve 95%

- 4× More Information: 37 vs 9 features

- Spatial Reasoning: Conv2D layers understand 3D patterns

- Temporal Context: LSTM captures movement sequences

- Multi-Modal Learning: Different branches for different aspects

- Advanced Training: Curriculum learning + data augmentation

- Biological Inspiration: Mimics how animals navigate with memory + spatial awareness